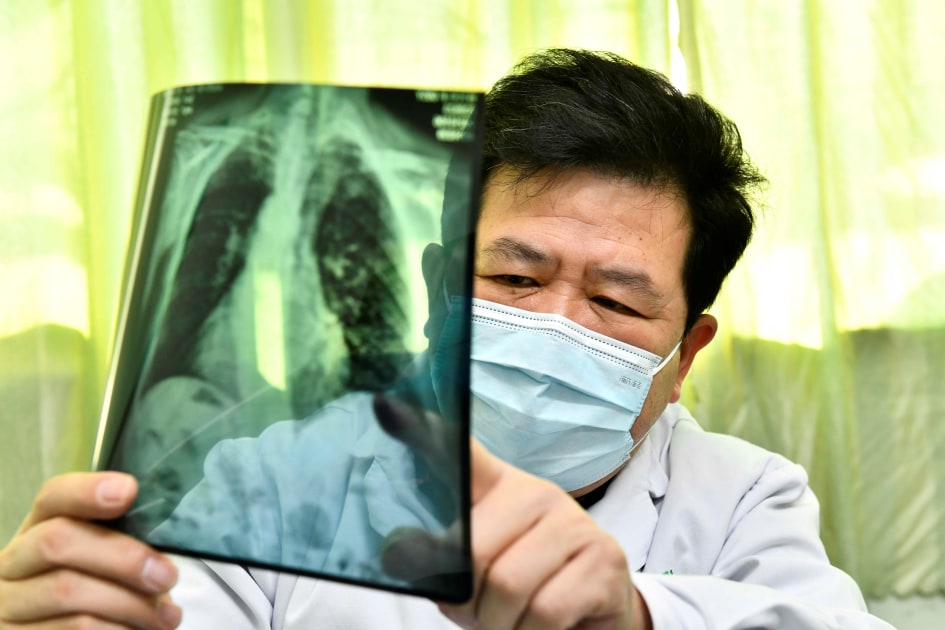

“COVID is a unique virus,” Dr. William Moore of NYU Langone Health told Engadget. Most viruses attack the respiratory bronchioles, leading to an area of increased density typical of pneumonia. “But what you don’t usually see is a huge hazy density.” However, this is exactly what doctors are finding with COVID patients. “They will have an increased density, which appears to be an inflammatory process rather than a typical bacterial pneumonia, which is a denser area in one specific location. [COVID] appears to be bilateral; it seems to be somewhat symmetrical.”

When the outbreak first hit New York City, “we were trying to figure out what to do, how we could help the patients,” Moore continued. “So there were a few things going on: a huge number of patients were coming in, and we had to figure out ways to predict what was going to happen [to them].”

To do this, the NYU-FAIR team started with chest X-rays. As Moore notes, X-rays are performed routinely, mostly when patients complain of shortness of breath or other symptoms of respiratory distress, and are ubiquitous in rural community hospitals and major metropolitan medical centers alike. The team then developed a set of metrics to measure complications, as well as the patient’s progression from admission to the ICU to ventilation, intubation, and potential mortality.

“This is another clearly demonstrable metric we can use,” Moore said of patient deaths. “Then we said, ‘Okay, let’s see what we can use to predict this,’ and of course the chest X-ray was one of the things we thought would be very important.”

After the team settled on the necessary criteria, they started training the AI/ML model. However, this posed another challenge. “The disease is new and its progression is non-linear,” Facebook AI program manager Nafissa Yakubova, who previously helped NYU develop faster MRIs, told Engadget. “It makes it difficult to make predictions, especially long-term predictions.”

What’s more, at the beginning of the epidemic, “we did not have COVID datasets, and there were especially no labeled datasets [for use in training an ML model],” she continued. “And the size of the datasets was also quite small.”

So the team did the next best thing: they “pre-trained” their model using larger publicly available chest X-ray databases, specifically MIMIC-CXR-JPG and CheXpert, using a self-supervised learning technique called Momentum Contrast (MoCo).
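For readers curious about what Momentum Contrast involves, the following is a minimal sketch of its two core ideas: a slowly updated "key" encoder and a contrastive loss. It is written in plain Python for illustration only and is not the FAIR-NYU team's code. The function names are hypothetical, and the default values `m=0.999` and `temperature=0.07` are the defaults from the original MoCo paper, assumed here rather than confirmed for this project.

```python
import math

def momentum_update(key_weights, query_weights, m=0.999):
    """Nudge the key encoder's weights slowly toward the query encoder's weights."""
    return [m * k + (1.0 - m) * q for k, q in zip(key_weights, query_weights)]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def info_nce_loss(query, positive_key, negative_keys, temperature=0.07):
    """Contrastive loss: small when the query is closer to its positive key
    (another view of the same image) than to the negative keys (other images)."""
    logits = [dot(query, positive_key) / temperature]
    logits += [dot(query, k) / temperature for k in negative_keys]
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
    return log_sum - logits[0]  # cross-entropy with the positive at index 0

# Toy embeddings (made up): the first pairing matches, the second does not.
loss_match = info_nce_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
loss_mismatch = info_nce_loss([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])
```

Because no labels appear anywhere in the loss, this kind of training can run on large public X-ray collections that were never annotated for COVID.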

Basically, as Towards Data Science’s Dipam Vasani explains, when training an AI to recognize specific things (dogs, for example) the model must be built up through a series of stages: first recognizing lines, then basic geometric shapes, and then more detailed patterns, before it can tell a husky from a border collie. What the FAIR-NYU team did was take the first few stages of their model and train them in advance on the larger public datasets, and then go back and refine the model using the smaller, COVID-specific dataset. “We are not diagnosing COVID, whether you have COVID or not, based on an X-ray,” Yakubova said. “We are trying to predict the progression, how severe it could be.”
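The pre-train-then-refine approach described above can be illustrated with a toy sketch. Everything here is an assumption made for illustration: `FROZEN_WEIGHTS` stands in for layers learned on large public datasets, the four data points are invented, and a real system would use a deep convolutional network in a framework such as PyTorch rather than a two-feature logistic regression.

```python
import math

# Stand-in for layers pre-trained on large public datasets; never updated below.
FROZEN_WEIGHTS = ((1.0, 1.0), (1.0, -1.0))

def pretrained_features(x):
    """Apply the frozen 'early layers' to a raw input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(inputs, labels, steps=500, lr=0.5):
    """Train only a small logistic-regression head on top of the frozen features."""
    feats = [pretrained_features(x) for x in inputs]
    w, b = [0.0] * len(feats[0]), 0.0
    n = len(feats)
    for _ in range(steps):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for f, y in zip(feats, labels):
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            grad_w = [g + err * fi for g, fi in zip(grad_w, f)]
            grad_b += err
        # Gradient-descent step on the head's parameters only.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(x, w, b):
    """Return 1 if the head's predicted probability is at least 0.5, else 0."""
    f = pretrained_features(x)
    return 1 if sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) >= 0.5 else 0

# Tiny invented dataset standing in for the small, task-specific data.
inputs = [(0.0, 0.0), (0.2, 0.1), (0.9, 1.0), (1.0, 0.8)]
labels = [0, 0, 1, 1]
w, b = fine_tune_head(inputs, labels)
predictions = [predict(x, w, b) for x in inputs]
```

The design point is that only the small head is trained on the scarce data, while the expensive-to-learn feature layers are reused as they are.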

“The key here, I think, was … the use of a series of images,” she continued. When a patient is admitted, the hospital will take an X-ray and then likely take more over the following days, “so you have this time series of images, which was the key to more accurate predictions.” Once the FAIR-NYU model was fully trained, it was able to achieve about 75 percent diagnostic accuracy, on par with the performance of human radiologists in some cases.
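The article does not say how the team combined a patient's successive X-rays. As one simple possibility, and purely as an assumption, the per-image feature vectors could be averaged into a single vector before a prediction is made. The function name `mean_pool` and the example numbers are hypothetical.

```python
def mean_pool(feature_sequence):
    """Average per-image feature vectors from one patient's X-rays into one vector."""
    if not feature_sequence:
        raise ValueError("need features from at least one image")
    n = len(feature_sequence)
    # zip(*...) groups the i-th feature of every image together.
    return [sum(column) / n for column in zip(*feature_sequence)]

# Two invented feature vectors, e.g. from X-rays taken on consecutive days.
pooled = mean_pool([[1.0, 2.0], [3.0, 4.0]])
```

Averaging discards the order of the images, so a model meant to capture how a patient's condition changes over time would likely need a sequence-aware component instead.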

This is a smart solution for several reasons. First, the initial pre-training is extremely resource-intensive, to the point that it is simply not feasible for individual hospitals and health centers to do on their own. But using this method, large organizations like Facebook can develop the initial model and then provide it to hospitals as open-source code, which health care providers can then finish training with their own datasets and a single GPU.

Second, since the initial models were trained on generalized chest X-rays rather than COVID-specific data, these models (in theory at least; FAIR has not actually tried this yet) can be adapted to other respiratory diseases by simply swapping out the data used for fine-tuning. This will enable health care providers not only to build a model for a particular disease, but also to tailor that model to their specific environment and circumstances.

“I think it’s one of the wonderful things the Facebook team has done,” Moore concluded, “to take something that is a great resource, the CheXpert and MIMIC databases, and be able to apply it to a new and emerging disease process that we knew very little about when we started doing this, in March and April.”